VTubers, also known as virtual YouTubers, use motion capture to create a real-time digital avatar that mimics their facial expressions and body movements. This guide will show you precisely what you’ll need to start VTubing. VTuber setups are about as diverse as the setups of regular YouTube creators. The route you choose depends on your budget, content, and the kind of ‘look’ you want for your channel.

This guide covers:

- The most common setups for non-animators who are new to VTubing

- What different VTubing configurations cost

- What workflow you’ll need to create your avatars in different software

- Free VTuber software

- How VTubers achieve full-body motion capture

- How to stream your avatar to various platforms in real-time

First, do you know what kind of VTuber you want to be?

The first thing to consider is how you’re planning to build your VTuber setup:

- As an independent creator: You’re in complete control of your avatar. You’re the pilot, operator, voice actor, and chat moderator! It can be especially stressful as you’re also live-streaming most of the time. However, you have total creative control and can create any world you can imagine. In some cases, creators band together into small indie teams and divide the workload.

- As an agency: Agency VTubers are almost like regular actors. The ‘VTuber’ is a digital character managed by a team of people while an actor performs the vocals and movements. Agencies will also manage the VTuber’s marketing, social media, etc.

Tip: If you’re an English VTuber who wants to get to the next level, take a look at the English-language agency VShojo — they provide the support, management, and outreach you need.

We’ll look exclusively at the kind of setup you need to be an independent creator. This in-depth guide should give you a good idea of what hardware to buy, what software to use, and how to start streaming.

How much do I need to spend on my VTuber setup?

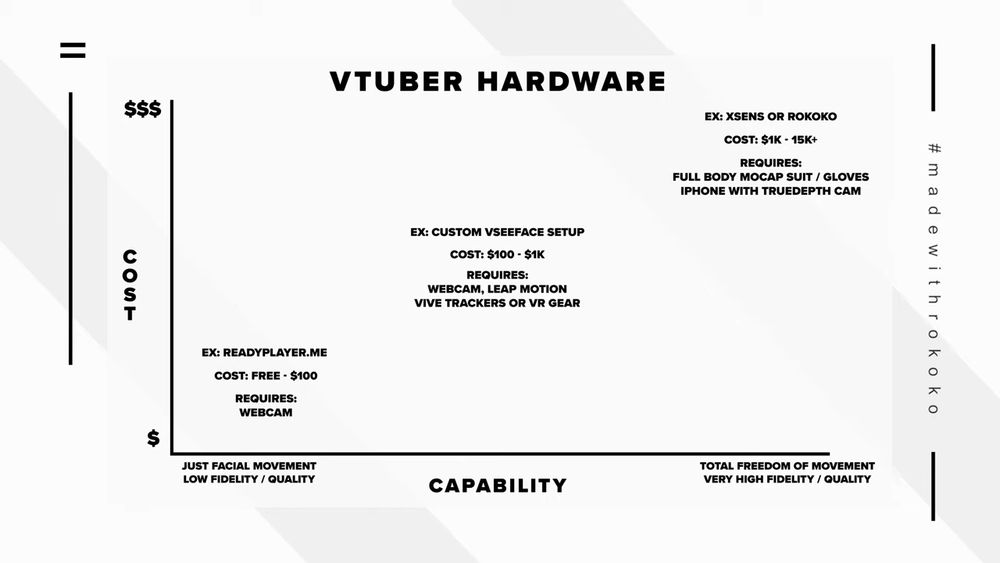

The important thing to remember is that VTubing can be totally free or cost thousands of dollars. It depends on the quality and range of motion capture you want and your character development costs. If you’ve got a half-decent computer with a webcam, you’re already on the right track. It’s feasible to spend $0 and still stream an animated character with fairly good facial movement. However, if you want better animation fidelity and a full range of motion, you can expect to spend around $4,000 to $15,000 on hardware and software.

There are three levels of motion capture animation that you can achieve as a VTuber:

- Low fidelity facial movement (costs between $0 and $100 per month)

- Mid-level facial animation and better tracking software for your body (costs between $100 and $1,000 and includes solutions like the Leap Motion Controller)

- High fidelity animation for full-body performance capture, including finger and hand tracking (costs between $4,000 and $15,000). For example, the famous VTuber CodeMiko uses a custom Xsens setup that reportedly costs around $13,000 for the hardware alone.
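As a rough way to read the three tiers, here is a minimal Python sketch that picks the most capable tier whose entry price fits a given budget. The price ranges are copied from the list above; the `Tier` class and the `best_tier_for` function are hypothetical names made up for this illustration, and the rule it applies (entry price only) is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    min_usd: int
    max_usd: int


# Price ranges taken from the list above. Note that the guide quotes the
# first tier per month, while the other two are hardware/software purchases.
TIERS = [
    Tier("low-fidelity facial movement", 0, 100),
    Tier("mid-level facial animation and body tracking", 100, 1_000),
    Tier("high-fidelity full-body performance capture", 4_000, 15_000),
]


def best_tier_for(budget_usd: int) -> Tier:
    """Return the most capable tier whose entry price fits the budget."""
    if budget_usd < 0:
        raise ValueError("budget must be non-negative")
    # TIERS is ordered from cheapest to most expensive, so the last
    # affordable entry is the most capable one.
    affordable = [tier for tier in TIERS if tier.min_usd <= budget_usd]
    return affordable[-1]


for budget in (0, 2_500, 13_000):
    print(budget, "->", best_tier_for(budget).name)
```

A budget that falls between tiers (say $2,500) simply lands in the mid-level tier, since it does not reach the entry price of a full-body setup.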

What you need to know when choosing a VTuber avatar

Some entry-level VTuber software will provide you with a large collection of free avatars to choose from. Some even allow you to customize your avatar with a few sliders. This is great when you’re just starting out, but if you want to break away from the standard designs, you’ll need to create your own model.

It’s unlikely that you will find a VTuber model on asset marketplaces like TurboSquid, as most of those models are not rigged for motion capture and don’t have the correct blendshapes. You have three options:

- Use a sophisticated VTuber maker like VRoid Studio, Daz3D, MetaHuman Creator, or Character Creator 3. Keep reading for more info on these avatar creators.

- Have your character custom commissioned from a reputable 3D artist. There are plenty on Fiverr and other marketplaces.

- Create a character yourself in Autodesk Maya or a similar application.

Note: This guide won’t dive into custom character creation in programs like Maya because it’s a pretty advanced subject. It takes years to learn how to sculpt good 3D models. If you do create your own model in Maya or with an avatar builder, remember that VTuber models need specific blendshapes, as most facial motion capture solutions use Apple’s ARKit and TrueDepth camera. You’ll have to stick to ARKit’s standard 52 blendshapes to make your character compatible with VTuber software.
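To make the blendshape requirement concrete, here is a small Python sketch that compares a model's blendshape names against the ARKit set and reports what is missing. The names are the blendshape coefficient names from Apple's ARKit documentation; the `missing_blendshapes` helper and the example model are hypothetical, and the check assumes your modeling tool exports names with Apple's exact spelling and capitalization, which is not always the case.

```python
# Blendshape coefficient names as listed in Apple's ARKit documentation
# (ARFaceAnchor.BlendShapeLocation).
ARKIT_BLENDSHAPES = frozenset("""
eyeBlinkLeft eyeLookDownLeft eyeLookInLeft eyeLookOutLeft eyeLookUpLeft
eyeSquintLeft eyeWideLeft eyeBlinkRight eyeLookDownRight eyeLookInRight
eyeLookOutRight eyeLookUpRight eyeSquintRight eyeWideRight
jawForward jawLeft jawRight jawOpen
mouthClose mouthFunnel mouthPucker mouthLeft mouthRight
mouthSmileLeft mouthSmileRight mouthFrownLeft mouthFrownRight
mouthDimpleLeft mouthDimpleRight mouthStretchLeft mouthStretchRight
mouthRollLower mouthRollUpper mouthShrugLower mouthShrugUpper
mouthPressLeft mouthPressRight mouthLowerDownLeft mouthLowerDownRight
mouthUpperUpLeft mouthUpperUpRight
browDownLeft browDownRight browInnerUp browOuterUpLeft browOuterUpRight
cheekPuff cheekSquintLeft cheekSquintRight
noseSneerLeft noseSneerRight
tongueOut
""".split())


def missing_blendshapes(model_shapes):
    """Return the ARKit blendshape names the model lacks, sorted by name."""
    return sorted(ARKIT_BLENDSHAPES - set(model_shapes))


# Hypothetical export from a modeling tool with only a few shapes defined.
example_model = ["jawOpen", "eyeBlinkLeft", "eyeBlinkRight", "mouthSmileLeft"]

missing = missing_blendshapes(example_model)
print(f"{len(missing)} ARKit blendshapes missing, starting with: {missing[:3]}")
```

An empty result means every ARKit name has a matching blendshape on the model; it does not tell you whether the shapes themselves look right, which still needs a visual check.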

Popular VTuber avatar makers

An avatar creator is a great shortcut for the creation of your character model. Just remember that these aren’t plug-and-play. You’ll still need to make adjustments and, in some cases, create blendshapes.

- For anime characters, use VRoid Studio or Daz3D.

- For realistic human characters, use MetaHuman Creator or Character Creator 3.

- For non-human and Pixar-style characters, use Daz3D.

VTuber workflow for VRoid Studio

VRoid Studio allows you to create classic anime-styled characters. It’s completely free and has a marketplace of community-made elements; you can browse those VRM models here. The first step to building your character is to choose one of the default models and start customizing. There are a ton of options, from ear shape, skin color, and hair to the length of the legs and torso. The software will automatically add spring bones to your model’s hair and clothing to get an excellent range of motion. Just export your avatar in a T-pose, and your model is 90% of the way there. All that’s left is adding ARKit blendshapes and retargeting animation onto the model. Check out the video below to learn more about a VTuber workflow for VRoid Studio:

VTuber workflow for MetaHuman Creator

MetaHuman Creator is one of the most talked-about software releases of the decade. It’s built up a tremendous amount of hype and, despite being in beta, pretty much delivers what Unreal Engine and Epic Games promised: realistic humans rendered in real-time. For free. You can use MetaHumans to create a human-like avatar that looks similar to yourself or completely different. It’s got customization options for everything; even the iris size can be customized.

Your MetaHuman will be hosted in the cloud on Quixel Bridge, Unreal Engine’s asset management software, so there’s no actual asset export that needs to take place. All you need to do is download your character, load it into your scene, and you’re good to start retargeting your motion capture. Check out the video below to learn more about a VTuber workflow for MetaHuman Creator:

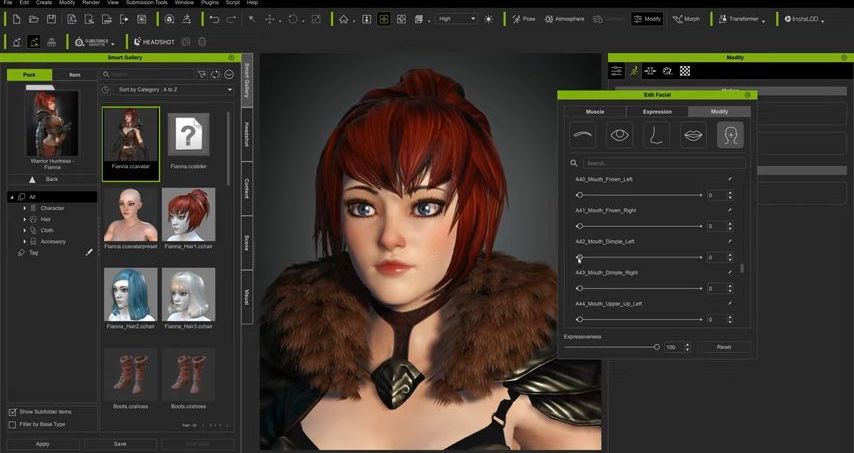

VTuber workflow for Character Creator 3

Built by Reallusion, Character Creator 3 is a very similar concept to MetaHumans. CC3 is paid software, and it also has a bunch of paid add-ons for extra functionality. There’s even a neat add-on that enables you to upload a photo and create an approximate digital double of a person. It comes with even more options to customize your character than MetaHuman Creator, but it has a few tricky elements. For example, you need to purchase the pipeline extension to export your character. CC3 comes with a 30-day free trial, which we recommend using before fully committing to the software. Once your character is imported to Unreal Engine using the auto importer, it’s relatively straightforward to retarget your animation. Check out the process for a VTuber workflow with Character Creator 3:

VTuber workflow for Daz3D

Daz3D is similar to CC3 in functionality. You can create digital doubles, easily customize your avatar, and more. But there’s one big advantage to Daz3D: its large marketplace of non-human characters. From animals to teddy bears, there’s an extensive library of character options. Check out the process for a VTuber workflow with Daz3D.

Free VTuber software that’s all-in-one

Once you’ve got an avatar, you need to import it into software that can retarget the motion capture data and render your character in real-time. The three most popular free tools that VTubers use to create content are VUP, VTube Studio, and Animaze by FaceRig. All three are free to use and have paid versions. If you want to upgrade to higher quality facial motion capture, full-body performance capture, and maybe even finger capture, we recommend using Unreal Engine due to its powerful render engine and all-around stability. Unreal Engine is also free to use; it just has a much steeper learning curve.

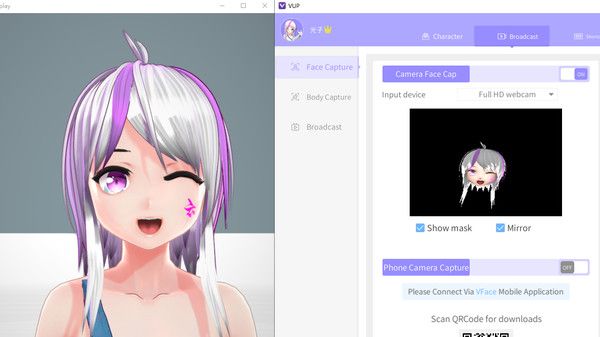

VUP

VUP is a popular program for people making a VTuber model in an anime character style. It allows you to customize existing avatars, upload custom builds, and record facial mocap with your webcam or phone. It’s free to use, plus you can import motion capture data from additional tools like motion capture gloves. VUP also supports 2D VTubers using Live2D models.

VTube Studio

VTube Studio is another program with plenty of anime-styled characters. However, it only works with Live2D models.

Animaze

Animaze is free software that’s a great option for people in search of the easiest way to start a live broadcast today. You can create custom avatars, buy community models, and even upload your own characters from external software. You can also find many animal models or models from popular games. It’s free to use and can import motion capture data from a limited number of mocap tools.

Full-Body VTuber Setup using Rokoko

Full body performance capture will record the motion of your entire body. It’s significantly more advanced than the all-in-one options highlighted above — but is the best choice if you want to output high-quality content. This setup assumes that you’re using fairly powerful computer equipment that exceeds the minimum requirements for running 3D applications. You’ll also need an iPhone X or higher at your disposal. You do NOT need a big studio space or a greenscreen as Rokoko works using inertial motion capture technology and not optical.

Here’s the hardware you need:

- Rokoko Smartsuit for body tracking

- Rokoko Smartgloves for finger tracking

- Rokoko Face Capture or a similar solution for facial tracking with your iPhone (iPhone X or higher, as they have a TrueDepth Camera)

- A laptop or computer, Mac or Windows, to run Rokoko Studio

For more in-depth setup details, check out this YouTube series. Be aware that there are a few minor networking considerations when using an inertial motion capture solution.

The software you need, assuming you already have a 3D character and will be streaming with Unreal Engine:

- Rokoko Face Capture or a similar solution for facial tracking with your iPhone (iPhone X or higher, as they have a TrueDepth Camera)

- Rokoko Studio to capture all the mocap in real-time

- Rokoko Unreal plugin to record or stream the mocap directly into your 3D scene in Unreal

- Unreal Engine to create your scene and render it in real-time using a virtual camera

- OBS or another screen recorder to capture your viewport and stream to Twitch, etc.

So how do VTubers stream to Twitch or YouTube?

Once you're piloting your digital avatar in Unreal Engine, it’s pretty easy to get it onto a stream. A virtual streamer follows the same process as a regular streamer — you broadcast your screen with a video overlay.

If you’ve ever used any kind of streaming software, then you probably know about Open Broadcaster Software or OBS for short. OBS is free, open-source software for video recording and live streaming that works by simply capturing your display. To overlay your character on a video or a game, you’ll need to set up a green screen in Unreal. You can use the tutorial below to see exactly how to achieve that effect. This tutorial will teach you how to use OBS and a green screen in Unreal Engine to isolate your 3D character:
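For intuition about what the green screen step does, here is a deliberately simplified Python sketch of chroma keying: every pixel close enough to the key color becomes transparent, so only the character remains on top of your video or game. This is an illustration under stated assumptions, not how OBS implements its Chroma Key filter; the `chroma_key` function, the distance measure, and the `tolerance` value are all made up for this example.

```python
def chroma_key(pixels, key=(0, 255, 0), tolerance=60):
    """Make pixels close to the key color fully transparent.

    pixels: list of (r, g, b) tuples.
    Returns a list of (r, g, b, a) tuples, where a is 0 (transparent)
    for background pixels and 255 (opaque) for everything else.
    """
    keyed = []
    for r, g, b in pixels:
        # Sum of per-channel differences from the key color.
        distance = abs(r - key[0]) + abs(g - key[1]) + abs(b - key[2])
        alpha = 0 if distance <= tolerance else 255
        keyed.append((r, g, b, alpha))
    return keyed


# Two green background pixels and one skin-tone pixel from the character.
frame = [(0, 255, 0), (10, 240, 5), (200, 150, 120)]
print(chroma_key(frame))
```

This is also why the background in Unreal should be a flat, evenly lit green that does not appear anywhere on your character: any part of the avatar near the key color would be removed along with the background.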

Take your VTubing to the next level with full-body motion capture

Looking for more information on Rokoko’s motion capture solutions and how it works for VTubers? Book a demo here.
